DEPLOYMENTS

Background

There is little doubt that automation of manual processes is becoming the norm in every industry. This post narrows the topic down to automation for a software project. The area comes with quite a bit of jargon: CI/CD/CT, DevOps, continuous delivery and so on. CI/CD pipelines come in many models depending on your application architecture, but one of the most popular is built around containers, for example Docker containers.

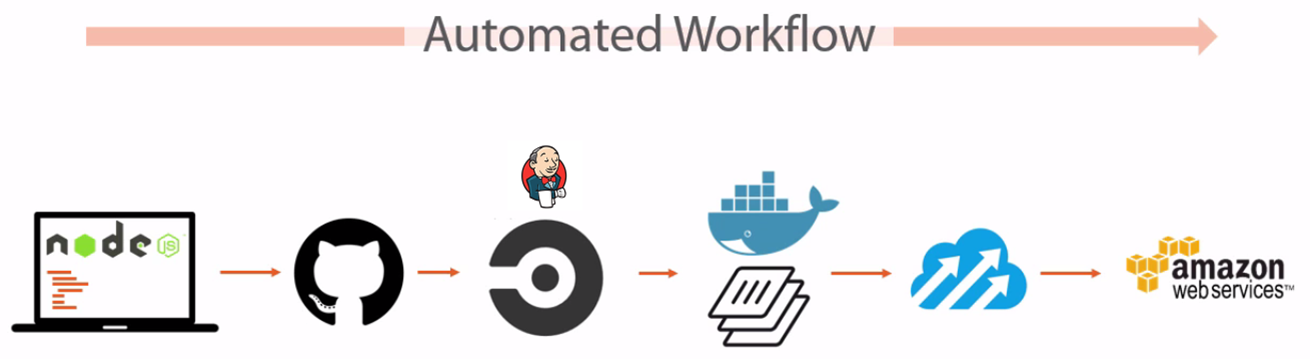

Below is a model I saw in some forums, in which a software change moves through a series of steps: it passes through CI, a container image is built, and that image is deployed to AWS.

The flow is basically this:

- A Node.js application change is pushed to GitHub

- A GitHub post-commit hook kicks off a CI job that runs in CircleCI

- CircleCI runs the unit tests and then triggers a build job preconfigured in Docker Hub

- Docker Hub reads the Dockerfile in the root directory of the source, builds the container image and then calls a webhook on Tutum (now Docker Cloud), which has a service and a node cluster preconfigured

- Docker Cloud is already integrated with AWS (credentials are configured), so it can pick an AMI, instantiate it, bring up the Docker engine on it, then take the previously created container image and start the container on that EC2 instance. The service in Docker Cloud also maps a DNS entry so that the application can be reached on a certain port

- So, as you can see, a change in source code triggers a series of automated steps that ultimately results in that change being deployed to production (i.e. if you use AWS as production)

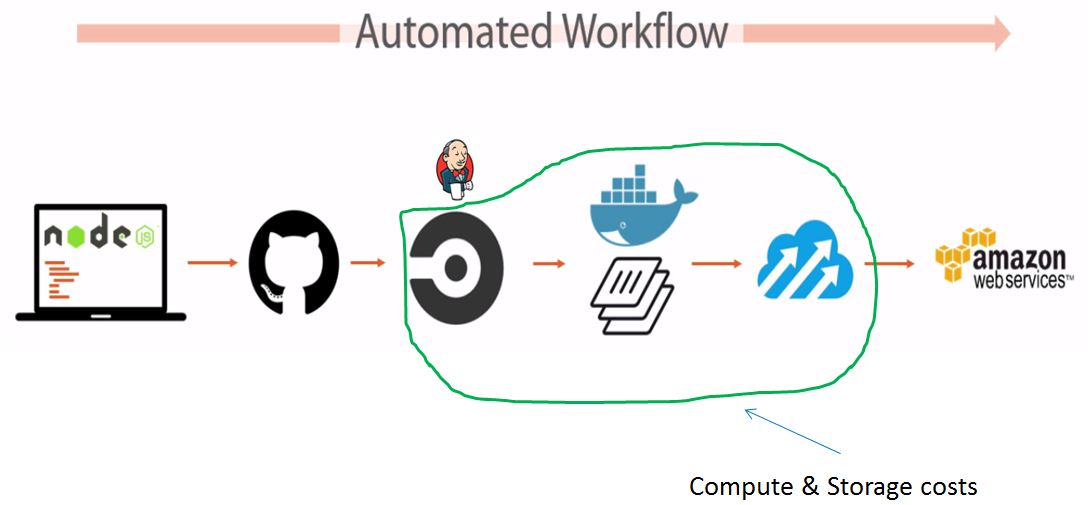

As you can see, all of the compute, storage and networking lives in the public cloud. You did not have to think about on-premises infrastructure at all, and it is good to know that a CI/CD pipeline can run entirely in the cloud.

However, what if we already have some pieces in place on-premises that we want to leverage, so that we do NOT have to pay for CircleCI, Docker Hub, Docker Cloud and so on?

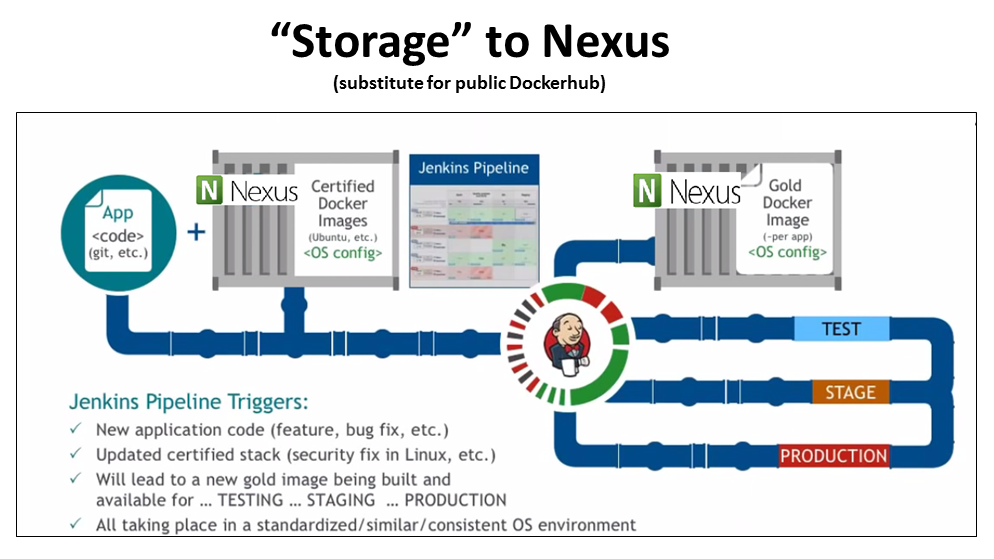

A common pattern

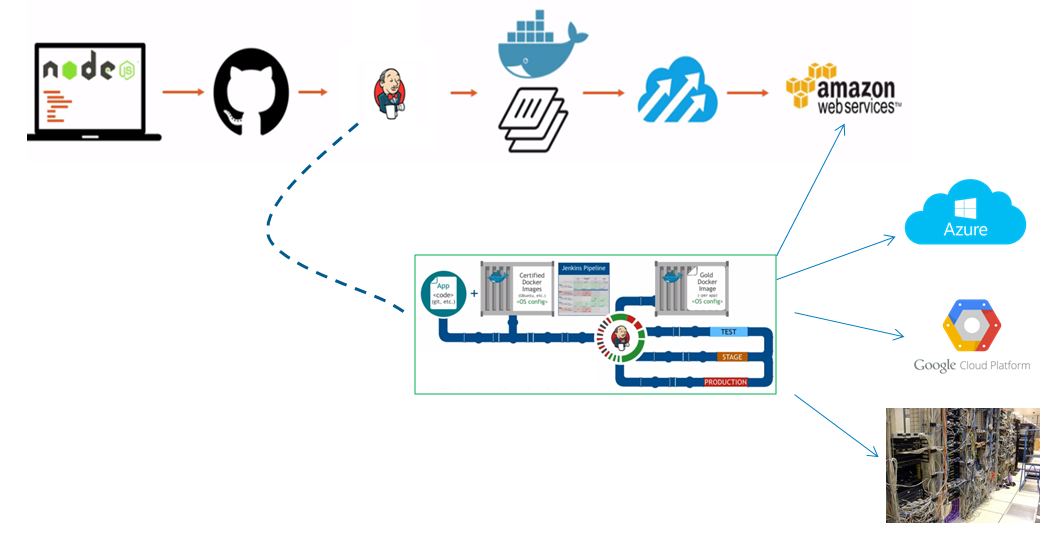

One of the most common tool combinations in enterprise organizations that plan to adopt more open source and CI/CD is Jenkins, Nexus, SonarQube and the like, as shown in the diagram above.

So let's see how we can leverage the infra/platform we have already built without incurring extra costs, specifically Jenkins and Sonatype Nexus.

So we can swap the pieces as below and change the overall flow:

- CircleCI -> Jenkins

- Docker Hub (image build) -> a build node builds the container image

- Docker Hub (image storage) -> Nexus 3.0 holds the Docker repositories

- Docker Cloud -> Jenkins deploys to the platform of our choice, i.e. AWS, Azure, on-premises, Google Cloud etc.

Modified Pattern

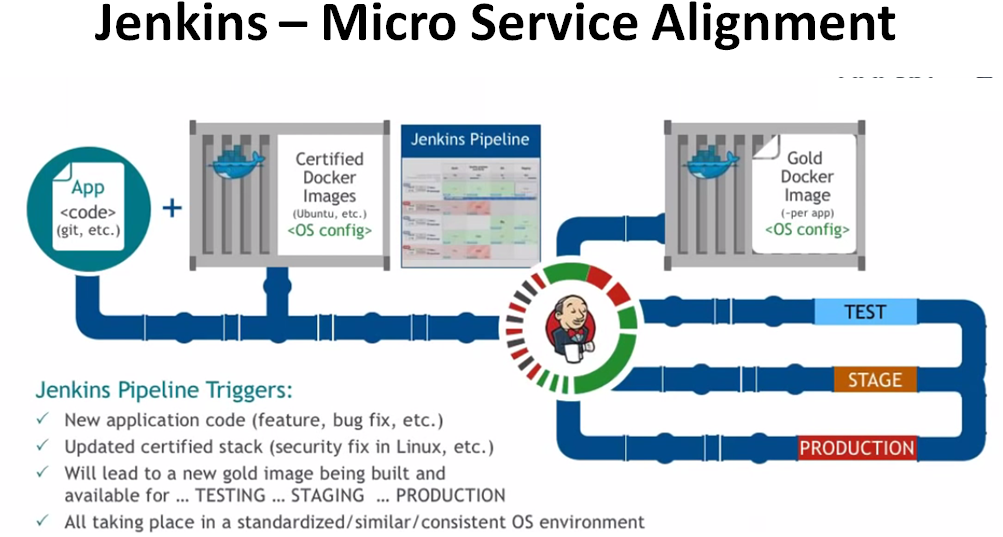

Jenkins Pipeline

A Jenkins Pipeline can be used to achieve the pattern below.

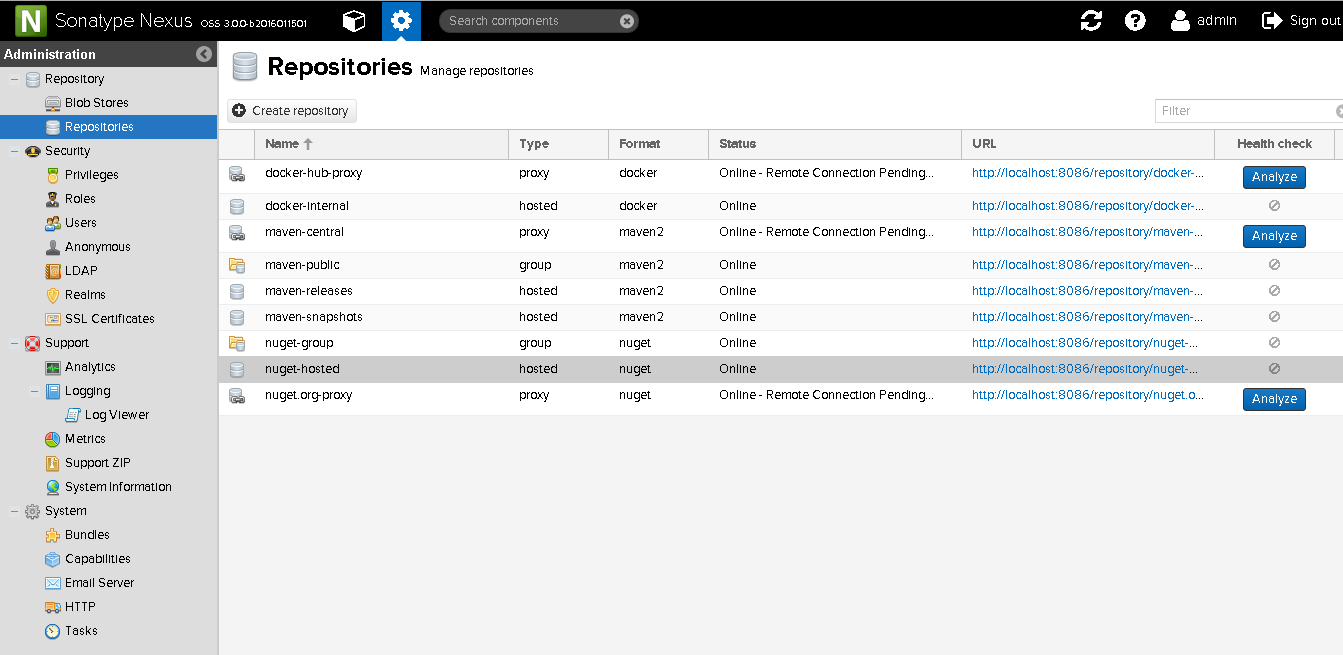

Use Nexus instead of Docker Hub

Nexus 3 supports Docker repositories, so managing Docker images there should feel familiar if you already know how to build, tag, push and pull them.
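The tag and push cycle against a Nexus Docker repository could be sketched as the shell script below. It is only a minimal sketch under stated assumptions: the registry address `nexus.example.com:8083` is a made-up placeholder (use whatever host and port your Nexus Docker repository is actually exposed on), the helper names `image_ref`, `run` and `publish_image` are hypothetical, and it assumes `docker login` has already been run against that registry. By default it only prints the commands; set `DRY_RUN=0` to execute them.

```shell
#!/bin/sh
# Sketch: publish a locally built image to a Docker repository hosted on Nexus 3.
# Assumptions: the registry address, helper names and defaults are illustrative only.

# Compose the full image reference: <registry>/<name>:<tag>
image_ref() {
    printf '%s/%s:%s\n' "$1" "$2" "$3"
}

# Print the command when DRY_RUN=1 (the default); execute it otherwise.
run() {
    if [ "${DRY_RUN:-1}" = "1" ]; then
        printf '+ %s\n' "$*"
    else
        "$@"
    fi
}

# Tag <name>:latest with the build id and push it to the registry.
publish_image() {
    registry=$1
    name=$2
    build_id=$3
    ref=$(image_ref "$registry" "$name" "$build_id")
    run docker tag "$name:latest" "$ref"
    run docker push "$ref"
}

# Example invocation with placeholder values (dry run unless DRY_RUN=0).
publish_image "${NEXUS_REPO:-nexus.example.com:8083}" rebates-offer-manager "${BUILD_ID:-1}"
```

Any host that can reach the registry can then fetch the same build with `docker pull` and the same image reference.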

How does your existing Jenkins job change?

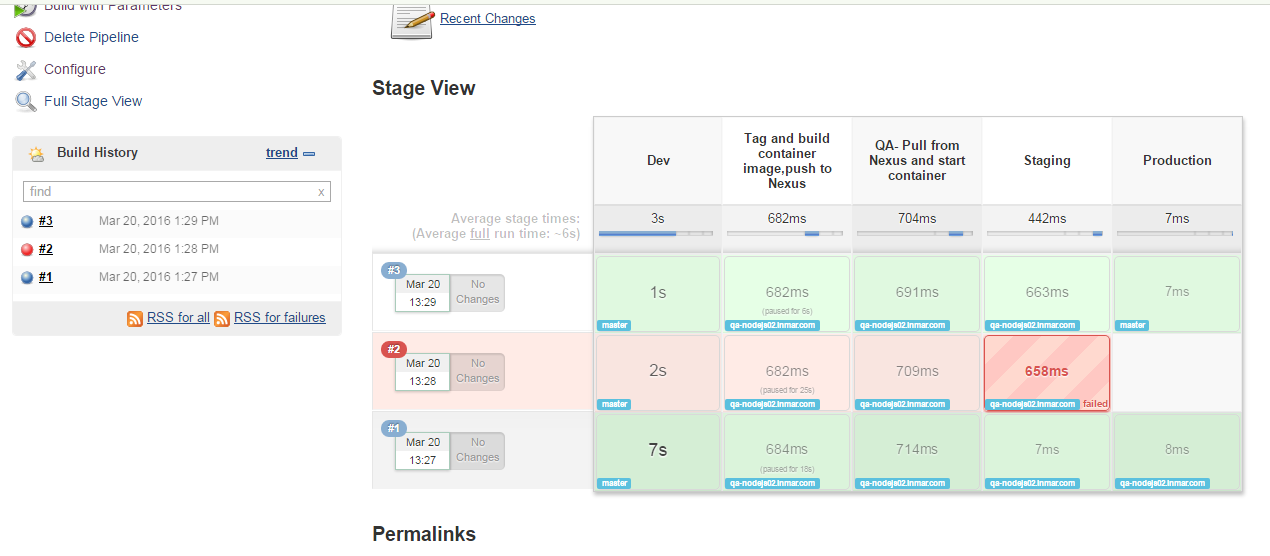

If you use Jenkins Pipeline, which also provides stage views, this should be relatively easy. The pipeline script below is an example.

    stage 'Dev'
    node('master') {
        // Check out the application source from Git
        checkout([$class: 'GitSCM', branches: [[name: '*/master']], doGenerateSubmoduleConfigurations: false, extensions: [], submoduleCfg: [], userRemoteConfigs: [[credentialsId: '226656ad-ed4e-4490-aa18-3f87cad77aa4', url: '<SOURCE_URL>']]])
    }

    stage 'Build & Package'
    node('master') {
        // Wait for manual approval before building
        input message: 'Do you want to proceed?', ok: 'Hell Ya!'
        // Build under the same image name that is tagged and pushed below
        sh 'docker build --build-arg username=pradeep --build-arg token=ec3ulgkv2mekoqarv4pzytrh2gdiyuuy5fgqsdnns56e3orxngeq -t rebates-offer-manager -f Dockerfile .'
        // Print the target registry and the environment for debugging
        echo "$NEXUS_REPO"
        sh 'env'
        // Tag the image with the build ID and push it to Nexus
        sh "docker tag rebates-offer-manager:latest ${NEXUS_REPO}/rebates-offer-manager:${env.BUILD_ID}"
        sh "docker push ${NEXUS_REPO}/rebates-offer-manager:${env.BUILD_ID}"
    }

    stage 'QA'
    node('master') {
        // Pull the image that was just pushed
        sh "docker pull ${NEXUS_REPO}/rebates-offer-manager:${env.BUILD_ID}"
    }

    // Placeholder stages with no steps yet
    stage name: 'Staging', concurrency: 1
    stage name: 'Production', concurrency: 1

- It is assumed that you have a basic understanding of Jenkins Pipeline (which helps to build awesome pipelines)

- The Docker engine and client are installed on the Jenkins master (of course, if you would rather run on a build node, replace master in the script above with that node's name or label)

- So the Jenkins master builds and tags the container image

- $NEXUS_REPO is passed as a string parameter to the Jenkins job

- It is assumed that Nexus 3.0 is being used, since it supports the Docker image format (the Nexus Repository Manager 3.0 LTS will be coming out by the end of March 2016, as per their forums)

- env.BUILD_ID is the Jenkins build number, which we use to tag the Docker image before pushing it to Nexus

- Once the container image is available in the Nexus repo, we can pull it down to any environment (QA, STAGING, PRE-PROD, whatever)

A Stage view from Jenkins looks like this.

Summary

So now we have shifted the “compute” and “storage” to our own tools, such as Jenkins and Nexus. If these are tools you already run in your on-premises data center, you make effective use of those resources as well.

Caveat: Whether this should be your long-term solution depends on whether you want to stay on-premises, move to a public cloud, or run a hybrid of both. My take is to NOT get locked in to a vendor and to keep your systems as agnostic and abstracted from vendor dependencies as possible. In short, that means we should be able to reconstruct our infra/platform/software from source code.

Deployment

We can use Jenkins to deploy the containers to our targeted deployment platforms: AWS, Azure, rack, on-premises etc.

See Jenkins plugins that integrate with each of the above platforms.
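Whichever plugin or platform is chosen, a deploy step on a plain Docker host can come down to pulling the image from Nexus and replacing the running container. The shell sketch below illustrates that idea only; it is not how any particular Jenkins plugin works. The registry address, the container name, port 3000 and the helper names `run` and `deploy_image` are all assumptions made for illustration, and the script only prints the commands unless `DRY_RUN=0` is set.

```shell
#!/bin/sh
# Sketch: replace a running container with a newly published image.
# Assumptions: image reference, container name and port are placeholders.

# Print the command when DRY_RUN=1 (the default); execute it otherwise.
run() {
    if [ "${DRY_RUN:-1}" = "1" ]; then
        printf '+ %s\n' "$*"
    else
        "$@"
    fi
}

# Pull the image, remove the old container and start a new one.
deploy_image() {
    ref=$1
    container=$2
    port=$3
    run docker pull "$ref"
    # Remove the previous container, if any; a missing container is not an error here.
    run docker rm -f "$container" || true
    run docker run -d --name "$container" -p "$port:$port" "$ref"
}

# Example invocation with placeholder values (dry run unless DRY_RUN=0).
deploy_image "${NEXUS_REPO:-nexus.example.com:8083}/rebates-offer-manager:${BUILD_ID:-1}" rebates-offer-manager 3000
```

A Jenkins stage could run such a script on the target host, passing in the same `NEXUS_REPO` and build ID that were used to tag the image.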

Some helpful links:

References: //seleniumframework.wordpress.com/2016/03/20/jenkins-nexus-for-micro-service-deployments/

Note: This was compiled just to build up a knowledge base.
